When residual learning meets dense aggregation: Rethinking the aggregation of deep neural networks

Abstract

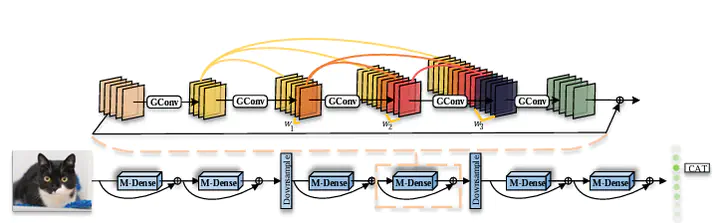

Various architectures (such as GoogLeNets, ResNets, and DenseNets) have been proposed. However, existing networks usually suffer from either redundant convolutional layers or insufficient utilization of parameters. To address these issues, we propose Micro-Dense Nets, a novel architecture with global residual learning and local micro-dense aggregations. Specifically, residual learning aims to efficiently retrieve features from different convolutional blocks, while micro-dense aggregation enhances each block and avoids redundant convolutional layers by reducing the number of residual aggregations. Moreover, the proposed micro-dense architecture has two characteristics: pyramidal multi-level feature learning, which progressively widens the deeper layers in a block, and dimension cardinality adaptive convolution, which balances each layer using linearly increasing dimension cardinality.
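
As a rough illustration of the two characteristics, the sketch below plans the channel layout of one micro-dense block. It is not the authors' implementation. The names `LayerSpec` and `micro_dense_plan`, the defaults `base_width=32` and `base_groups=4`, and the linear rules `width = base_width * (i + 1)` and `groups = base_groups * (i + 1)` are all assumptions chosen so that every layer keeps the same number of channels per group.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LayerSpec:
    in_channels: int  # block input plus all earlier layer outputs (dense aggregation)
    width: int        # output channels of this layer
    groups: int       # cardinality of the layer's grouped convolution


def micro_dense_plan(block_in, num_layers, base_width=32, base_groups=4):
    """Plan one hypothetical micro-dense block.

    Assumed rules (not taken from the paper):
      * layer i is (i + 1) times wider than the first layer (pyramidal widening);
      * layer i uses (i + 1) times the base cardinality (linear increase),
        so channels per group stay constant across layers.
    Returns the layer specs and the channel count after local aggregation.
    """
    if base_width % base_groups != 0:
        raise ValueError("base_width must be divisible by base_groups")
    specs = []
    aggregated = block_in
    for i in range(num_layers):
        width = base_width * (i + 1)
        groups = base_groups * (i + 1)
        specs.append(LayerSpec(aggregated, width, groups))
        aggregated += width  # later layers also see this layer's output
    return specs, aggregated


specs, aggregated = micro_dense_plan(block_in=64, num_layers=3)
for spec in specs:
    print(spec, "channels per group:", spec.width // spec.groups)
print("channels after aggregation:", aggregated)
```

Under these assumptions, the aggregated features would be projected back to `block_in` channels (for example with a 1x1 convolution) and added to the block input, so that each block contributes a single residual aggregation.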